Langtrace adds support for Guardrails AI

Karthik Kalyanaraman

⸱

Cofounder and CTO

Nov 13, 2024

Introduction

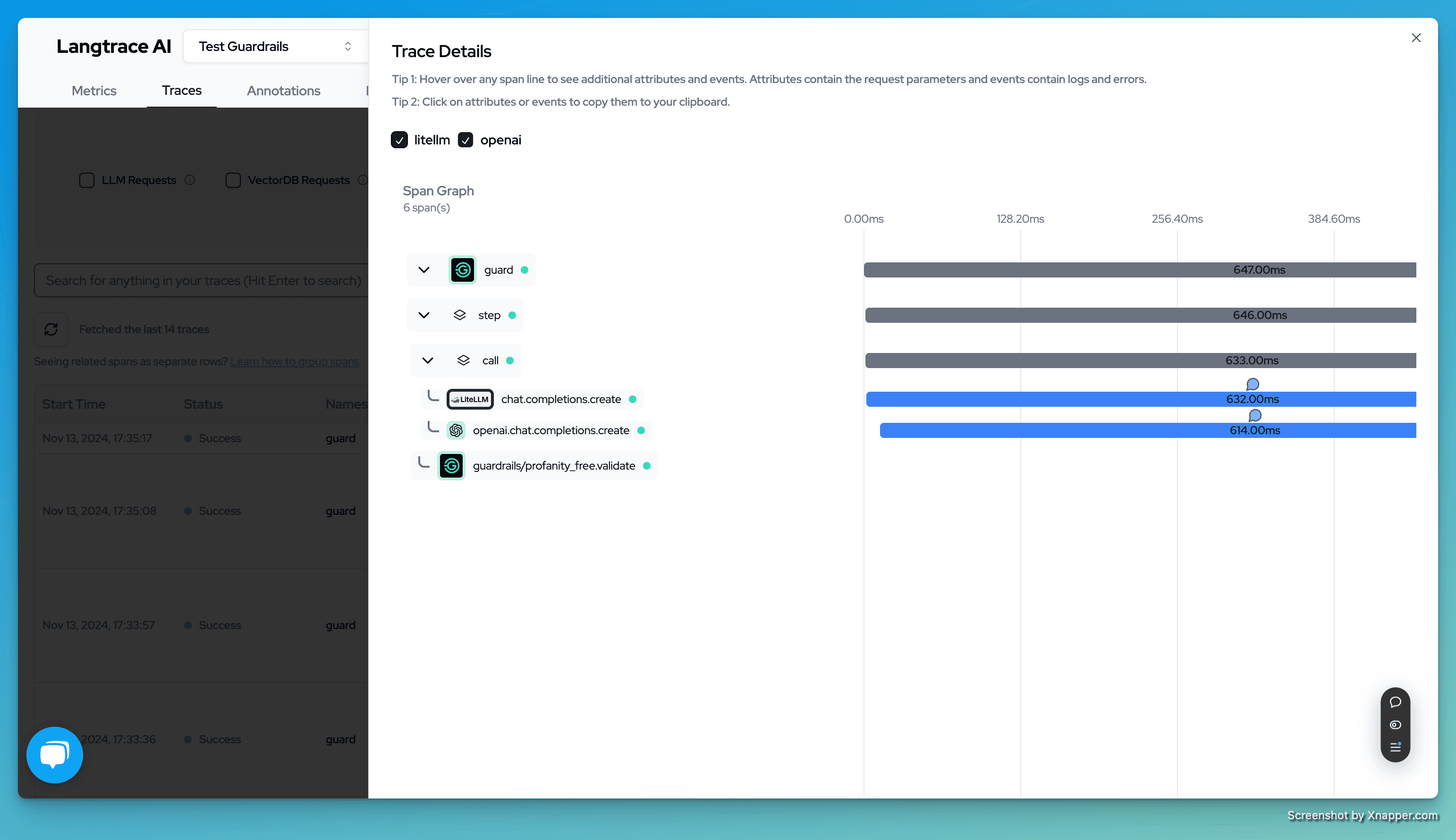

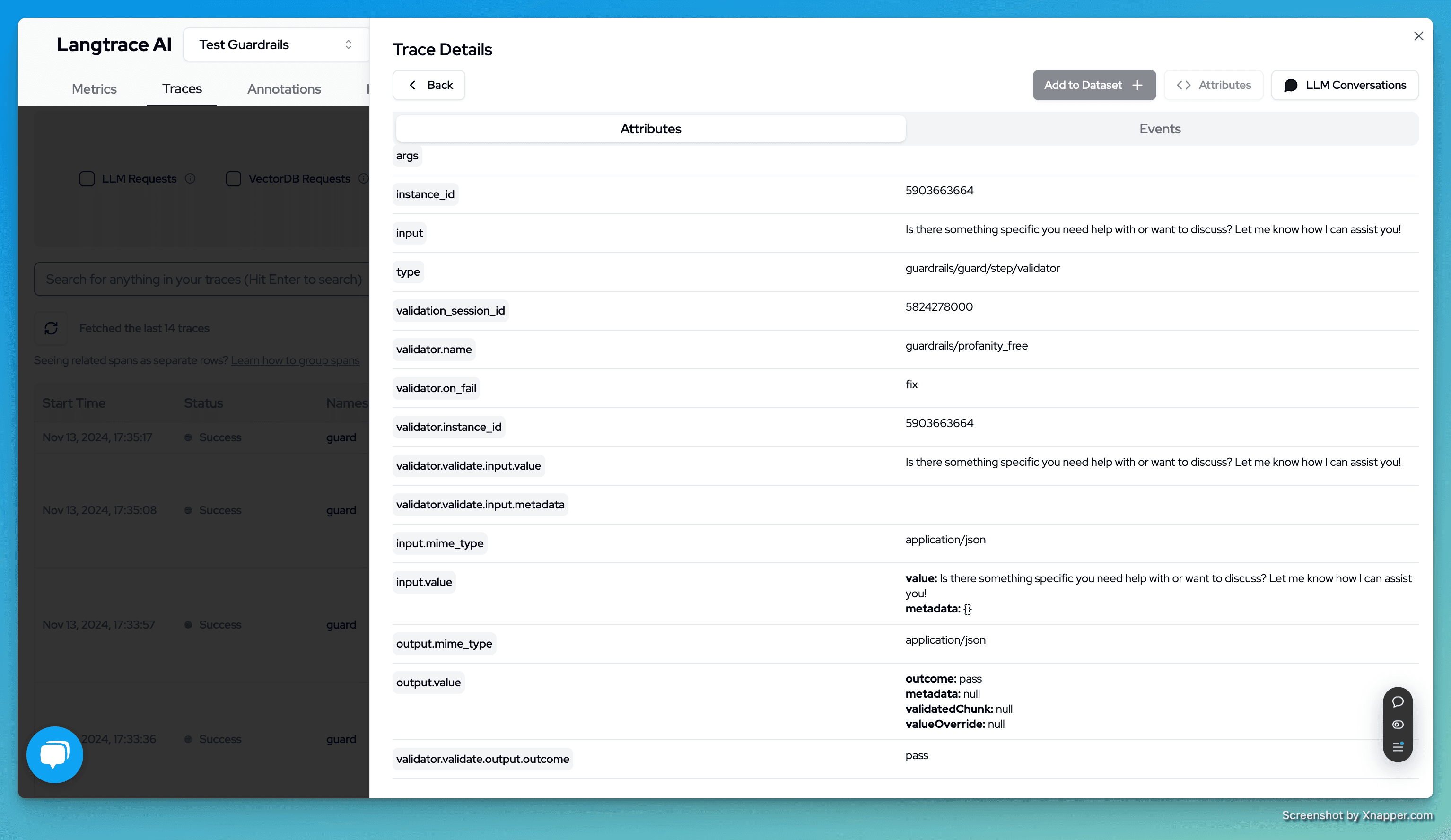

We are excited to announce that Langtrace now supports tracing Guardrails AI natively. Langtrace automatically captures traces from Guardrails, including the validators that ran and their metadata, and surfaces them in Langtrace. This gives you visibility into your model's performance in terms of the validators you are using and the corresponding hits Guardrails captures.

Setup

Sign up for Langtrace, create a project, and get a Langtrace API key

Install the Langtrace SDK

Set up the .env variable

Initialize Langtrace in your code

See the traces in Langtrace
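The steps above can be sketched in Python roughly as follows. This is a minimal sketch under stated assumptions, not the exact code from the original post: it assumes the API key is exposed as the `LANGTRACE_API_KEY` environment variable, that the SDK is installed as `langtrace-python-sdk`, and that the `RegexMatch` validator has been installed from the Guardrails Hub. The helper names `resolve_api_key`, `init_langtrace`, and `run_guarded_example` are hypothetical and exist only for illustration.

```python
import os

# Assumed install commands (run in your shell; check the docs if they differ):
#   pip install langtrace-python-sdk guardrails-ai
#   guardrails hub install hub://guardrails/regex_match


def resolve_api_key(env=os.environ):
    """Return the Langtrace API key from a mapping, or raise a clear error.

    Assumes the key is stored under LANGTRACE_API_KEY.
    """
    key = env.get("LANGTRACE_API_KEY", "").strip()
    if not key:
        raise RuntimeError(
            "LANGTRACE_API_KEY is not set; create a project in Langtrace "
            "and export its API key first."
        )
    return key


def init_langtrace():
    """Initialize Langtrace; call this before using Guardrails."""
    # Imported lazily so this module can be loaded without the SDK installed.
    from langtrace_python_sdk import langtrace

    langtrace.init(api_key=resolve_api_key())


def run_guarded_example():
    """Run one validation through Guardrails (illustrative validator choice)."""
    # Imported after Langtrace is initialized, on the assumption that the SDK
    # instruments libraries most reliably when it is set up first.
    from guardrails import Guard
    from guardrails.hub import RegexMatch

    guard = Guard().use(RegexMatch, regex=r"^[A-Z][a-z]+$", on_fail="exception")
    return guard.validate("Langtrace")
```

Calling `init_langtrace()` once at startup and then `run_guarded_example()` should produce a trace in your Langtrace project, assuming the key is valid. If you keep the key in a `.env` file, load that file into the environment before calling `init_langtrace()`.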

Useful Resources

Getting started with Langtrace https://docs.langtrace.ai/introduction

Langtrace Website https://langtrace.ai/

Langtrace Discord https://discord.langtrace.ai/

Langtrace GitHub https://github.com/Scale3-Labs/langtrace

Langtrace Twitter (X) https://x.com/langtrace_ai

Langtrace LinkedIn https://www.linkedin.com/company/langtrace/about/
