Integrating Langtrace with SigNoz

Karthik Kalyanaraman, Cofounder & CTO

Apr 10, 2024

Introduction

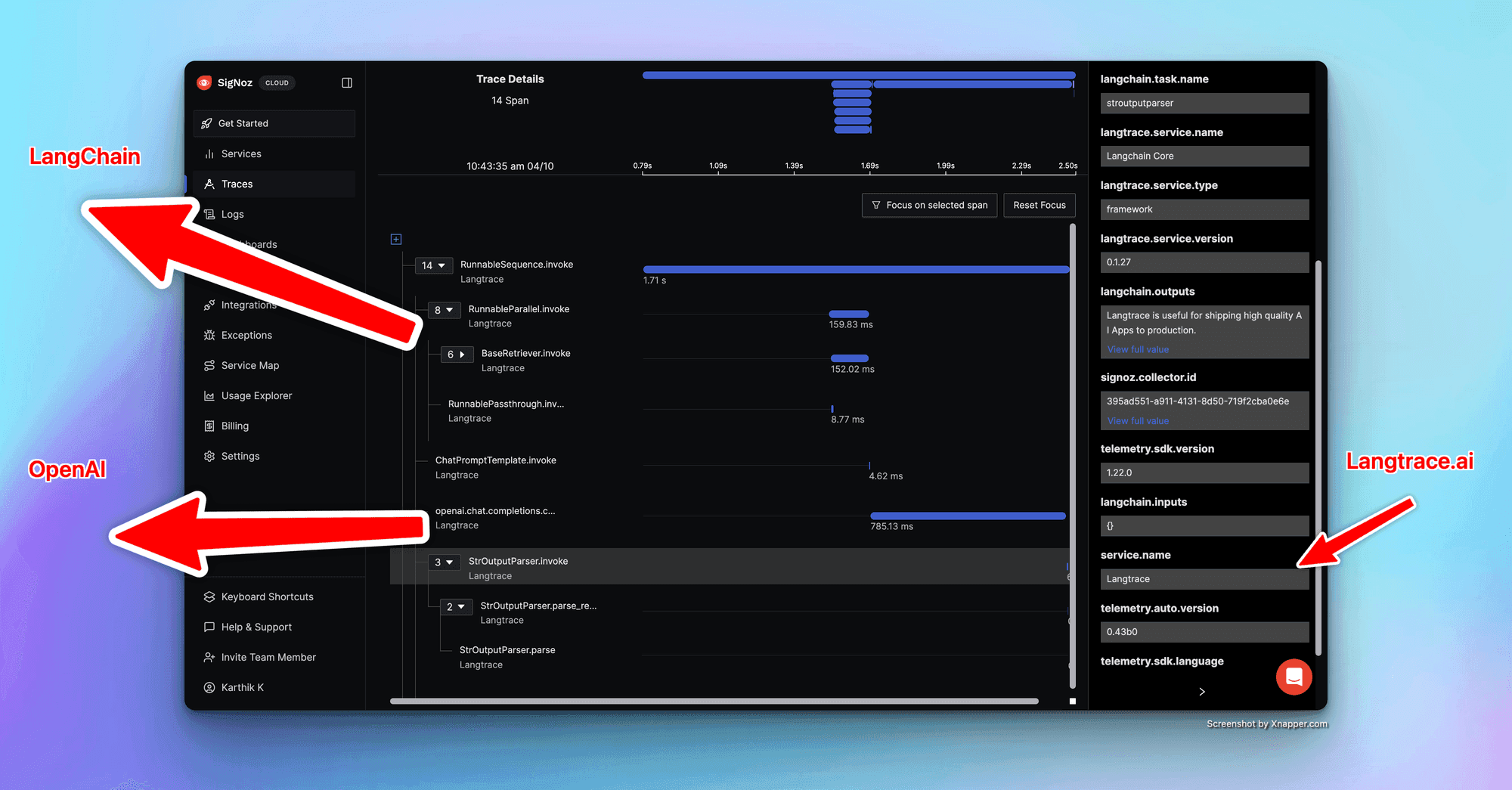

Are you concerned about the reliability and accuracy of responses from your RAG-based chatbot built using LangChain? In this post, we explain how to integrate Langtrace with SigNoz so you can ship traces from your LLM applications.

Steps

The setup is simple:

Install the Langtrace Python SDK: pip install langtrace-python-sdk

Initialize the SDK in your code with a single line.

Sign up and start visualizing the traces on SigNoz.
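As a minimal sketch of the first two steps, the snippet below imports the SDK and calls its init function. It assumes default arguments are enough; the sample code linked later in this post may pass extra options to `langtrace.init()`, so treat the bare call as an assumption rather than the exact line used there. The `init_langtrace` helper is a hypothetical name introduced only for illustration.

```python
# Prerequisite (shell): pip install langtrace-python-sdk
import importlib.util


def init_langtrace() -> bool:
    """Initialize Langtrace if the SDK is installed; return True if init ran."""
    if importlib.util.find_spec("langtrace_python_sdk") is None:
        print("langtrace-python-sdk not found; run: pip install langtrace-python-sdk")
        return False

    from langtrace_python_sdk import langtrace

    # The one-line setup. Assumption: no arguments are needed here; your
    # setup may require options that this sketch does not show.
    langtrace.init()
    return True
```

In an application you would typically skip the helper and place the import and the `langtrace.init()` call near the top of your entry module, before the LLM libraries are used.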

And the best part: both Langtrace and SigNoz are fully open source and support OpenTelemetry out of the box. Here's a quick experiment I did for a FastAPI-based RAG endpoint built using LangChain.

You can find the sample code at this link:

https://gist.github.com/karthikscale3/c13a5b7c53820ee297badd3acaa26d58

Sign up for SigNoz.

Set up the Langtrace SDK in your code with a single line (see line 11 of the sample code).

Get the SigNoz OTLP endpoint and OTLP header to start sending traces to SigNoz Cloud.

Run the server with the following command.

    OTEL_RESOURCE_ATTRIBUTES=service.name=Langtrace \
    OTEL_EXPORTER_OTLP_ENDPOINT="<SIGNOZ_OTLP_ENDPOINT>" \
    OTEL_EXPORTER_OTLP_HEADERS="<SIGNOZ_OTLP_HEADER>" \
    OTEL_EXPORTER_OTLP_PROTOCOL=grpc \
    opentelemetry-instrument uvicorn main:app
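If you prefer to launch the server from a script instead of typing the environment variables inline, the same setup can be sketched in Python. This is only an illustration: the helper names (`parse_otlp_headers`, `build_otel_env`, `run_server`) are hypothetical, and the header check relies on the OpenTelemetry convention that `OTEL_EXPORTER_OTLP_HEADERS` is a comma-separated list of `key=value` pairs.

```python
import os
import subprocess
from typing import Dict, List

# Same command as in the shell version of this step.
COMMAND: List[str] = ["opentelemetry-instrument", "uvicorn", "main:app"]


def parse_otlp_headers(raw: str) -> Dict[str, str]:
    """Split a comma-separated key=value header string into a dict."""
    headers: Dict[str, str] = {}
    for pair in raw.split(","):
        pair = pair.strip()
        if not pair:
            continue
        # Split on the first "=" only, so values may contain "=" themselves.
        key, sep, value = pair.partition("=")
        if not sep or not key.strip():
            raise ValueError(f"expected key=value, got {pair!r}")
        headers[key.strip()] = value.strip()
    return headers


def build_otel_env(endpoint: str, headers: str,
                   service_name: str = "Langtrace") -> Dict[str, str]:
    """Return a copy of the current environment plus the OTLP settings."""
    parse_otlp_headers(headers)  # fail fast on a malformed header string
    env = dict(os.environ)
    env.update({
        "OTEL_RESOURCE_ATTRIBUTES": f"service.name={service_name}",
        "OTEL_EXPORTER_OTLP_ENDPOINT": endpoint,
        "OTEL_EXPORTER_OTLP_HEADERS": headers,
        "OTEL_EXPORTER_OTLP_PROTOCOL": "grpc",
    })
    return env


def run_server(endpoint: str, headers: str) -> None:
    """Start uvicorn under opentelemetry-instrument with the OTLP settings."""
    subprocess.run(COMMAND, env=build_otel_env(endpoint, headers), check=True)
```

To use it, call `run_server(...)` with the endpoint and header values copied from your SigNoz account. Reading them from your own environment variables or a secrets store keeps the token out of source control.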

That's it! ✨ 🧙‍♂️ Enjoy the high-cardinality traces on SigNoz. 👇

Links

SigNoz - https://signoz.io/

Langtrace - https://langtrace.ai/

Langtrace SDK - https://pypi.org/project/langtrace-python-sdk/
